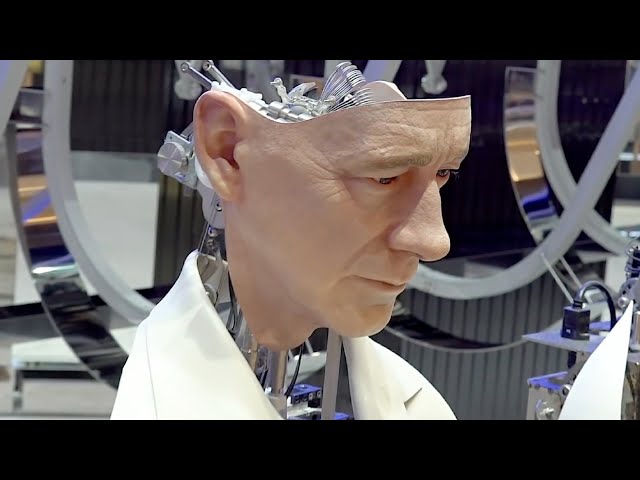

The Dawn of Superintelligence – Nick Bostrom on ASI.

Nick Bostrom, a renowned Swedish philosopher and AI researcher, believes that the dawn of superintelligence could represent an existential risk to humanity. He argues that a superintelligent AI could become powerful enough to threaten human safety and security in ways that humans would be unable to control. He identifies several potential risks associated with superintelligence, including:

Unaligned goals: A superintelligent AI might have goals or values that are different from or even opposed to those of humanity. This could lead to the AI taking actions that harm or even destroy humanity.

Intelligence explosion: An AI capable of improving its own design might trigger an “intelligence explosion”, a cycle of recursive self-improvement in which its intelligence increases very rapidly. This could make it extremely difficult for humans to control or predict the AI’s behavior.

Lack of transparency: The inner workings of a superintelligent AI might be extremely complex and difficult for humans to understand. This could make it challenging to anticipate the AI’s actions or intervene if it poses a threat.

Bostrom argues that it is crucial to carefully consider the potential risks of superintelligence before pursuing its development. He proposes several measures that could help mitigate these risks, such as:

Developing ethical guidelines for AI: Establishing clear ethical guidelines for AI research and development could help ensure that AI systems are aligned with human values.

Enhancing human control over AI: Developing mechanisms for humans to maintain control over AI systems could help prevent the AI from becoming autonomous and potentially harmful.

Promoting international cooperation on AI: Fostering international cooperation on AI research and development could help ensure that AI technologies are developed and used responsibly.

Bostrom’s work on superintelligence has had a significant impact on the field of AI safety and ethics. He has raised important concerns about the potential risks of superintelligence and has proposed thoughtful solutions for mitigating these risks. His work has helped to create a more informed and cautious approach to AI development.

Quotes on the Dangers of Artificial Intelligence

- “The pace of progress in artificial intelligence (I’m not referring to narrow AI) is incredibly fast. Unless you have direct exposure to groups like Deepmind, you have no idea how fast — it is growing at a pace close to exponential. The risk of something seriously dangerous happening is in the five-year time frame. 10 years at most.” ~Elon Musk

- “We should not be confident in our ability to keep a super-intelligent genie locked up in its bottle forever.” ~Nick Bostrom

- “My worst fear is that we, the industry, cause significant harm to the world. I think, if this technology goes wrong, it can go quite wrong and we want to be vocal about that and work with the government on that.” ~Sam Altman

- “If Elon Musk is wrong about artificial intelligence and we regulate it who cares. If he is right about AI and we don’t regulate it we will all care.” ~Dave Waters

- “Our approach to existential risks cannot be one of trial-and-error. There is no opportunity to learn from errors. The reactive approach – see what happens, limit damages, and learn from experience – is unworkable. Rather, we must take a proactive approach. This requires foresight to anticipate new types of threats and a willingness to take decisive preventive action and to bear the costs (moral and economic) of such actions.” ~Nick Bostrom

- “I thought Satya Nadella from Microsoft said it best: ‘When it comes to AI, we shouldn’t be thinking about autopilot. You need to have copilots.’ So who’s going to be watching this activity and making sure that it’s done correctly?” ~Maria Cantwell, US Senator

- “Learn about quantum computing, superintelligence and singularity. The inevitable is coming.” ~Dave Waters

Superintelligence Learning Resources

- Elon Musk on Artificial Superintelligence.

- SINGULARITY ARTIFICIAL INTELLIGENCE: Ray Kurzweil Reveals Future Tech Timeline To 2100.

- Superintelligence and the Future of AI.

- Superintelligence Quotes by Top Minds.

- Top 10 Biggest Risks of Artificial Intelligence.